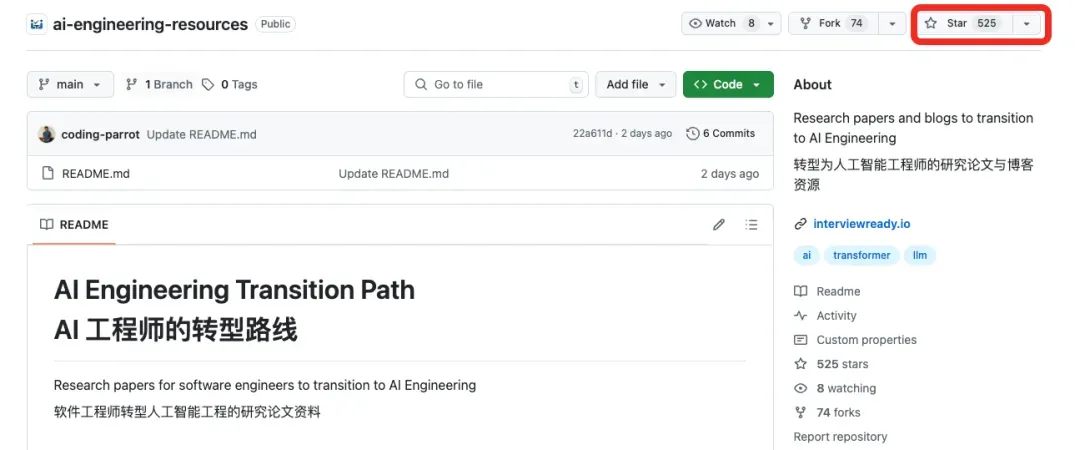

Project repository: https://github.com/InterviewReady/ai-engineering-resources

Tokenization

- Byte-pair Encoding https://arxiv.org/pdf/1508.07909

- Byte Latent Transformer: Patches Scale Better Than Tokens https://arxiv.org/pdf/2412.09871

Vectorization

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding https://arxiv.org/pdf/1810.04805

- IMAGEBIND: One Embedding Space To Bind Them All https://arxiv.org/pdf/2305.05665

- SONAR: Sentence-Level Multimodal and Language-Agnostic Representations https://arxiv.org/pdf/2308.11466

- FAISS library https://arxiv.org/pdf/2401.08281

- Facebook Large Concept Models https://arxiv.org/pdf/2412.08821v2

Infrastructure

- TensorFlow https://arxiv.org/pdf/1605.08695

- DeepSeek filesystem https://github.com/deepseek-ai/3FS/blob/main/docs/design_notes.md

- Milvus DB https://www.cs.purdue.edu/homes/csjgwang/pubs/SIGMOD21_Milvus.pdf

- Billion-Scale Similarity Search: FAISS https://arxiv.org/pdf/1702.08734

Core Architecture

- Attention is All You Need https://papers.neurips.cc/paper/7181-attention-is-all-you-need.pdf

- FlashAttention https://arxiv.org/pdf/2205.14135

- Multi Query Attention https://arxiv.org/pdf/1911.02150

- Grouped Query Attention https://arxiv.org/pdf/2305.13245

- Google Titans outperform Transformers https://arxiv.org/pdf/2501.00663

- VideoRoPE: Rotary Position Embedding https://arxiv.org/pdf/2502.05173

Mixture of Experts (MoE)

- Sparsely-Gated Mixture-of-Experts Layer https://arxiv.org/pdf/1701.06538

- Switch Transformers https://arxiv.org/abs/2101.03961

RLHF (Reinforcement Learning from Human Feedback)

- Deep Reinforcement Learning with Human Feedback https://arxiv.org/pdf/1706.03741

- Fine-Tuning Language Models with RLHF https://arxiv.org/pdf/1909.08593

- Training language models with RLHF https://arxiv.org/pdf/2203.02155

Chain of Thought

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models https://arxiv.org/pdf/2201.11903

- Chain of thought https://arxiv.org/pdf/2411.14405v1/

- Demystifying Long Chain-of-Thought Reasoning in LLMs https://arxiv.org/pdf/2502.03373

Reasoning

- Transformer Reasoning Capabilities https://arxiv.org/pdf/2405.18512

- Large Language Monkeys: Scaling Inference Compute with Repeated Sampling https://arxiv.org/pdf/2407.21787

- Scaling LLM Test-Time Compute Optimally Can Be More Effective than Scaling Model Parameters https://arxiv.org/pdf/2408.03314

- Training Large Language Models to Reason in a Continuous Latent Space https://arxiv.org/pdf/2412.06769

- DeepSeek R1 https://arxiv.org/pdf/2501.12948v1

- A Probabilistic Inference Approach to Inference-Time Scaling of LLMs using Particle-Based Monte Carlo Methods https://arxiv.org/pdf/2502.01618

- Latent Reasoning: A Recurrent Depth Approach https://arxiv.org/pdf/2502.05171

- Syntactic and Semantic Control of Large Language Models via Sequential Monte Carlo https://arxiv.org/pdf/2504.13139

Optimizations

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits https://arxiv.org/pdf/2402.17764

- FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision https://arxiv.org/pdf/2407.08608

- ByteDance 1.58 https://arxiv.org/pdf/2412.18653v1

- Transformer Square https://arxiv.org/pdf/2501.06252

- Inference-Time Scaling for Diffusion Models beyond Scaling Denoising Steps https://arxiv.org/pdf/2501.09732

- 1B outperforms 405B https://arxiv.org/pdf/2502.06703

- Speculative Decoding https://arxiv.org/pdf/2211.17192

Distillation

- Distilling the Knowledge in a Neural Network https://arxiv.org/pdf/1503.02531

- BYOL - Distilled Architecture https://arxiv.org/pdf/2006.07733

SSMs (State Space Models)

- RWKV: Reinventing RNNs for the Transformer Era https://arxiv.org/pdf/2305.13048

- Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality https://arxiv.org/pdf/2405.21060

- Distilling Transformers to SSMs https://arxiv.org/pdf/2408.10189

- LoLCATs: On Low-Rank Linearizing of Large Language Models https://arxiv.org/pdf/2410.10254

- Think Slow, Fast https://arxiv.org/pdf/2502.20339

Competition Models

- Google Math Olympiad 2 https://arxiv.org/pdf/2502.03544

- Competitive Programming with Large Reasoning Models https://arxiv.org/pdf/2502.06807

- Google Math Olympiad 1 https://www.nature.com/articles/s41586-023-06747-5

Hype Makers

- Can AI be made to think critically https://arxiv.org/pdf/2501.04682

- Evolving Deeper LLM Thinking https://arxiv.org/pdf/2501.09891

- LLMs Can Easily Learn to Reason from Demonstrations Structure https://arxiv.org/pdf/2502.07374

Hype Breakers

- Separating communication from intelligence https://arxiv.org/pdf/2301.06627

- Language is not intelligence https://gwern.net/doc/psychology/linguistics/2024-fedorenko.pdf

Image Transformers

- An Image is Worth 16x16 Words https://arxiv.org/pdf/2010.11929

- DeepSeek image generation https://arxiv.org/pdf/2501.17811

Video Transformers

- ViViT: A Video Vision Transformer https://arxiv.org/pdf/2103.15691

- Joint Embedding abstractions with self-supervised video masks https://arxiv.org/pdf/2404.08471

- Facebook VideoJAM AI generation https://arxiv.org/pdf/2502.02492

Case Studies

- Automated Unit Test Improvement using Large Language Models at Meta https://arxiv.org/pdf/2402.09171

- Retrieval-Augmented Generation with Knowledge Graphs for Customer Service Question Answering https://arxiv.org/pdf/2404.17723v1

- OpenAI o1 System Card https://arxiv.org/pdf/2412.16720

- LLM-powered bug catchers https://arxiv.org/pdf/2501.12862

- Chain-of-Retrieval Augmented Generation https://arxiv.org/pdf/2501.14342

- Swiggy Search https://bytes.swiggy.com/improving-search-relevance-in-hyperlocal-food-delivery-using-small-language-models-ecda2acc24e6

- Swarm by OpenAI https://github.com/openai/swarm

- Netflix Foundation Models https://netflixtechblog.com/foundation-model-for-personalized-recommendation-1a0bd8e02d39

- Model Context Protocol https://www.anthropic.com/news/model-context-protocol

- Uber QueryGPT https://www.uber.com/en-IN/blog/query-gpt/